I have released a tool that lets your avatar move its eyes and mouth using an eye tracker and a facial tracker.

This tool is a Unity editor extension distributed as a Unity Package. It is sold on Booth (VRCFacialOSCAvatarTool - AZW: 荏苒社 - BOOTH). Please buy and download it there.

This tool is made for AkaneFacialOSC, my app that sends tracking data over OSC.

Specification

- This tool creates animations from the blend shapes and bones in your avatar, and configures the parameters and the animations

- This tool configures the parameters for the signals sent by AkaneFacialOSC. However, other applications may also work with avatars set up with this tool, because the keys are basically the same as the keys of the tracker’s SDK

- The automatic blinking and lipsync can be disabled

- Other animations that users play manually are not disabled

- A switch to toggle the tracking can be added to the expression menu

Requirements

I hope this tool works with any avatar, but some avatars may be unsuitable.

- To move the mouth or eyelids, the avatar must have the corresponding blend shapes

- Generally, avatars with the blend shapes for ARKit’s face tracking have most of the required blend shapes, such as a raised right side of the upper lip with the jaw closed, or a pouty mouth with the mouth closed

- If a required blend shape is missing, you can probably use a lipsync blend shape instead, but because lipsync blend shapes are not made for facial tracking, the mouth may move in unintended ways

- To track your gaze, the eyeballs must have bones

- VRCExpressionParameter must have enough space.

- Each tracked value consumes 8 bits of parameter memory.

- For example, eye tracking needs at least 24 bits even when both eyes share combined data, because there are three kinds of data: horizontal movement, vertical movement, and blinking.

- The existing animations on your avatar can affect facial movement

- If an animation overrides the animations for facial tracking, the tracked parts will stop moving

- Typically, animations that change your facial expression with hand gestures can change which animations are played and override the tracker’s animations.

- If “Write Defaults” is turned on in your existing animations, switching the tracking on and off might not work well.

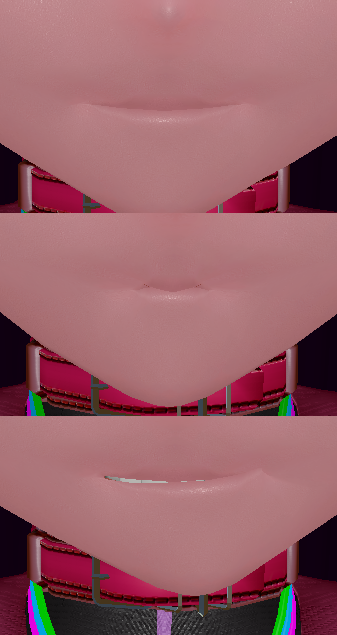

Examples of blendshapes. Top: neutral, middle: Mouth_Pout, bottom: Jaw_Left

How to set up your avatar

Overview

- Import the unitypackage

- Errors occur without VRCSDK3. (You can add the SDK after the tool is imported)

- Select [Tools]→[OSC]→[VRCFacialOSCAvatarTool]

- Select the avatar to be configured

- One of the avatars in the scene will be selected automatically

- Check whether the selected avatar is correct

- Configure the tool

- Choose blend shapes

- Choose eye-tracking

- Run

- Upload your avatar

- Run AkaneFacialOSC

Procedure

1. Preparation

Toolbar

The files are stored in Assets\AZW\FacialOSC\Editor and Assets\AZW\FacialOSC\Textures.

After the Unity Package is imported, a new menu appears on the toolbar. Choose [Tools]→[OSC]→[VRCFacialOSCAvatarTool] to open the window.

You can change the language with the dropdown list at the top of the window. The selected language is used for the UI and for the text on the expression menu.

This tool automatically chooses the active avatar in the scene. Please check that the selected avatar is the one you want to configure. If not, drag and drop the correct avatar into the selection box.

2. Configure the tool

Configurations

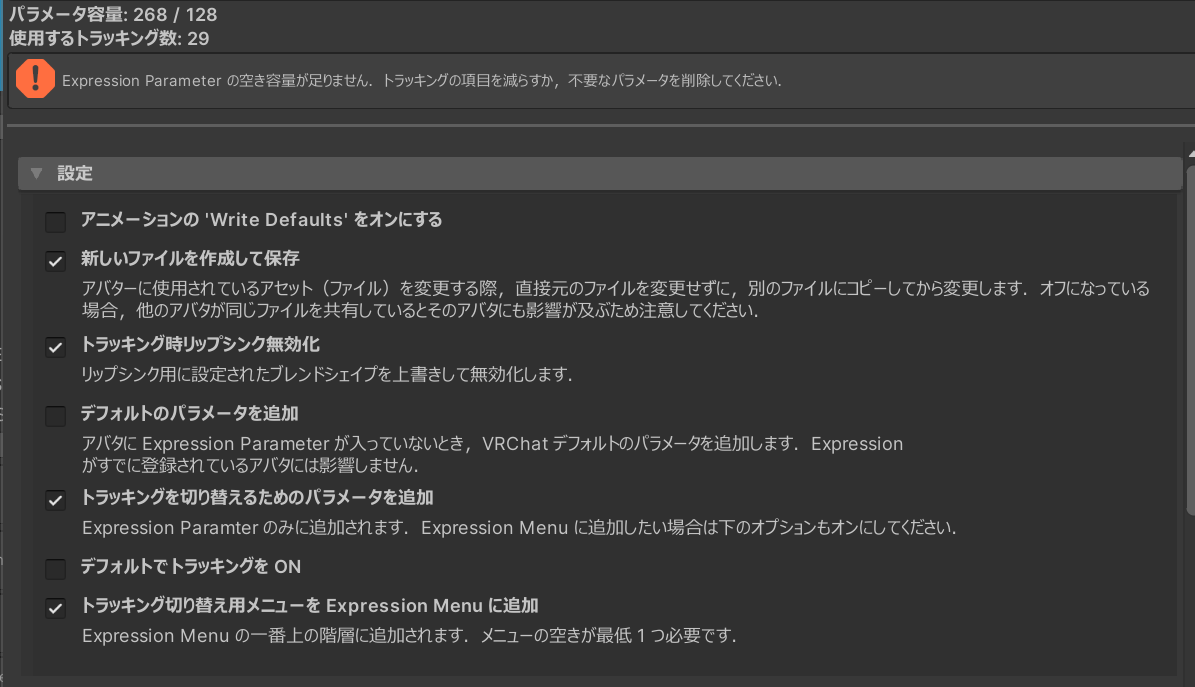

The top of the window shows the memory usage of the VRCExpressionParameter.

It must be 128 or less because of VRChat's limit.

You can also see the number of selected tracking elements. If there is not enough space, reduce the tracking elements or delete existing but unused parameters. The expression parameters are configured at the bottom of the VRCAvatarDescriptor. Select a row and click the delete button. Note that each float or int value uses 8 bits, and each bool uses 1. Because these parameters are used to switch your animations, including expressions and item changes, be careful when deleting existing values. Deleting one that is in use can cause an error on the expression menu or unintended behavior in the animations.
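
The budget arithmetic above can be sketched in a few lines of Python. This is only an illustration and not code from the tool: the 128 limit and the costs of 8 and 1 come from the text, the first three parameters are VRChat's defaults, and the function name and the three eye-tracking parameter names are hypothetical.

```python
# Cost of each expression parameter type, as described in the text.
COST = {"float": 8, "int": 8, "bool": 1}
LIMIT = 128  # limit for VRCExpressionParameter stated in this article


def memory_usage(parameters):
    """Return the total memory used by a {name: type} mapping."""
    return sum(COST[kind] for kind in parameters.values())


# VRChat's three default parameters plus three tracking values.
parameters = {
    "VRCEmote": "int",
    "VRCFaceBlendH": "float",
    "VRCFaceBlendV": "float",
    "EyeHorizontal": "float",  # hypothetical name
    "EyeVertical": "float",    # hypothetical name
    "EyeBlink": "float",       # hypothetical name
}

used = memory_usage(parameters)
print(f"{used} of {LIMIT} used, {LIMIT - used} free")
```

Listing the parameters like this before running the tool makes it easier to see which unused entries are worth deleting.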

- Turn on ‘Write Defaults’ on the animator states

- Turns on Write Defaults on the animator states

- Usually, turning it off is recommended. However, depending on the effects of other animations, there may be cases where it should be turned on

- Save to new files

- Saves the files as new files instead of overwriting them

- If turned on, the tool creates new files and modifies them instead of touching the existing files

- This also makes it easy to revert the changes, but the number of files will increase

- If turned off, the tool changes the original files directly. This can break the configuration of another avatar that uses the same files

- If several avatars share the files, you must turn this on

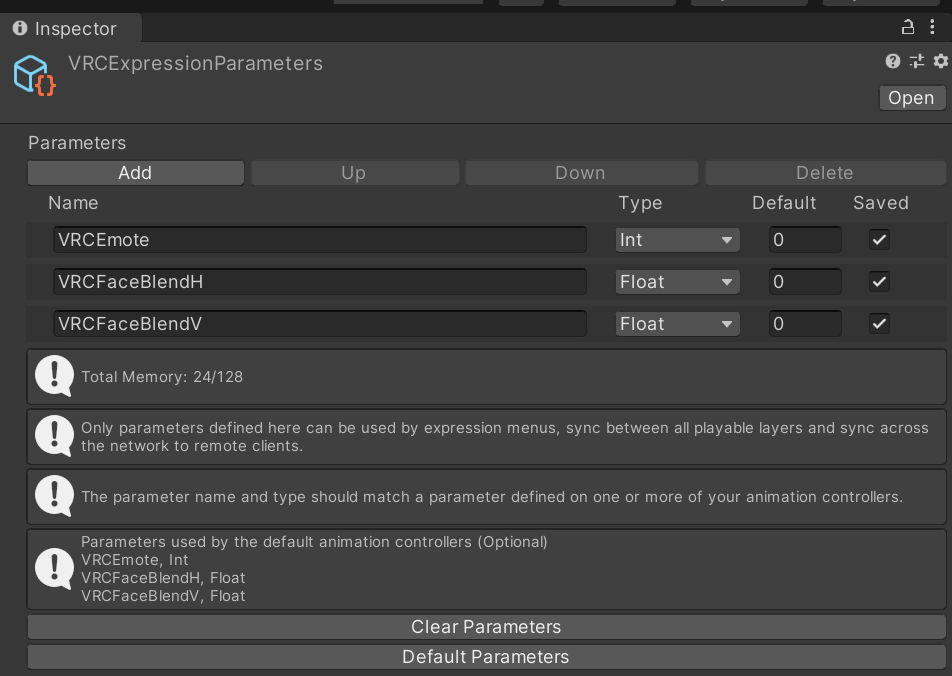

- Add the default parameters

- Enable this to add VRChat's default parameters if the avatar has no custom expression parameters

- VRCEmote, VRCFaceBlendH, and VRCFaceBlendV will be added, and they consume 24 bits of parameter memory

- Disable lipsync when tracking is enabled

- As the name says, it disables lipsync while tracking is enabled

- If turned off and lipsync is enabled on the avatar, the lips still move with lipsync while tracking

- VRCTrackingControl configures this, but other VRCTrackingControls can override the setting very easily

- The state changes whenever the animator switches to another animation that uses this control

- In that case, you should modify the Animator.

- Otherwise, disabling hand gestures may work as a workaround.

The last three rows are about switching the tracking.

- Add a parameter to switch tracking animations

- This adds only a parameter

- If you want to add the switch to the expression menu, enable the last row, “Add to the switch for face tracking to the expression menu”

- If you want to make your own expression menu, you can leave the last row turned off

- Tracking on as default

- The default value of the parameter will be enabled

- Enable to add switches on the expression menu

- One or two free slots are required on the menu

- One is for facial tracking, the other for eye tracking

The default values of VRCExpressionParameter

3. Blendshapes

Select the blend shapes to be added.

This tool automatically selects the blend shapes by name and marks the blend shapes to be added.

There may not be enough memory if too many blend shapes are selected.

Enabling “Use essential only” for the blend shapes may help reduce the selection.

Note that selecting items that use the same blend shapes, such as Mouth_Smile and Mouth_Sad_Smile, causes incorrect behavior.
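
A conflict of this kind can be checked mechanically. The sketch below is hypothetical and is not the tool's code: it assumes you have written down, for each selected tracking item, the set of avatar blend shapes its animation drives. The blend shape names on the right-hand side are invented for illustration.

```python
from itertools import combinations


def find_conflicts(selection):
    """Return pairs of tracking items whose animations share a blend shape.

    `selection` maps a tracking item to the set of blend shape names it drives.
    """
    conflicts = []
    for (item_a, shapes_a), (item_b, shapes_b) in combinations(selection.items(), 2):
        shared = shapes_a & shapes_b
        if shared:
            conflicts.append((item_a, item_b, sorted(shared)))
    return conflicts


# Hypothetical avatar: the blend shape names in the sets are made up.
selection = {
    "Mouth_Smile": {"mouth_smile"},
    "Mouth_Sad_Smile": {"mouth_smile", "mouth_sad"},
    "Jaw_Open": {"jaw_open"},
}

for item_a, item_b, shared in find_conflicts(selection):
    print(f"{item_a} and {item_b} both drive {shared}")
```

If the check reports a pair, deselect one of the two items before running the tool.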

Refer to the AkaneFacialOSC documentation for the roles of the blend shapes and keys and how they relate. The documentation of the SRanipal SDK by HTC Vive also has information about the blend shapes.

About the main blend shape items

| Item | Description |
|---|---|
| Eye_Left_Blink, Eye_Right_Blink | Blinking. Required for eye tracking. You can use Eye_Blink instead if you do not wink |
| Eye_Blink | Blinking of both eyes. Required if you do not use Eye_Left_Blink and Eye_Right_Blink |
| Jaw_Left_Right | Horizontal movement of the jaw. Has little effect on the expression |
| Jaw_Open | Required. The blend shape to open the mouth. Similar to vrc.v_ah |
| Mouth_Pout | Required. A pouty but closed mouth. Without this, you always look like you are saying "ee". You could use vrc.v_ou instead, but the result can differ because the mouth is slightly open in that shape. |
| Mouth_Smile | Recommended. The corners of the closed mouth raised. Required for smiling. Do not use together with Mouth_Sad_Smile. |
| Mouth_Sad | The corners of the closed mouth lowered. Do not use together with Mouth_Sad_Smile. |
| Mouth_Sad_Smile | A combination of Mouth_Sad and Mouth_Smile that reduces memory usage |
| Mouth_Upper_Inside_Overturn | The upper lip bitten by the lower lip. I think most avatars and users do not use this |
| Mouth_Lower_Inside_Overturn | The lower lip bitten by the upper lip. I think most avatars and users do not use this |
| Cheek_Puff | Puffed cheeks |
| Cheek_Suck | Both cheeks sucked in |
| Mouth_Upper_Up | Raises the upper lip with the mouth closed. I think it is not usually used for cute expressions. |
| Mouth_Lower_Down | Lowers the lower lip with the mouth closed. Handy for a disgusted "ugh" face. |
| Tongue_LongStep1 | The tongue sticking out as in a "th" sound, with the mouth closed. Tongue_LongStep2 is similar but sticks out further. |

This tool does not use blend trees for blend shapes because it can cause some unintended behavior depending on the structure of the existing animations.

4. Eye bones (gaze)

Select the checkbox to enable the gaze.

The dropdown list selects the layer for the gaze animation. The default is Additive. You can change it if you want.

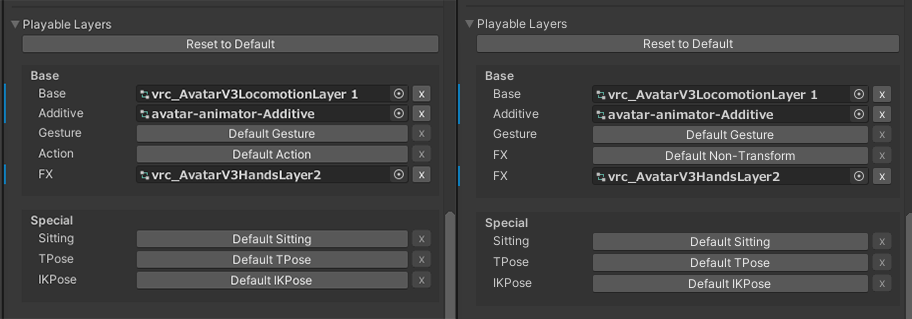

If the Playable Layers on the VRCAvatarDescriptor are broken (e.g., the same layer appears twice or the layers are in the wrong order), the tool cannot run correctly.

Left: correct, Right: wrong, two FX layers
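
The two kinds of breakage mentioned here, a duplicated layer and a wrong order, can be expressed as a small check. This is a hypothetical sketch, not part of the tool: it assumes the standard base layer order of VRChat Avatars 3.0 (Base, Additive, Gesture, Action, FX) and takes the layer types as a plain list of names that you read off the inspector yourself.

```python
# Assumed standard order of the base Playable Layers in VRChat Avatars 3.0.
EXPECTED_ORDER = ["Base", "Additive", "Gesture", "Action", "FX"]


def check_playable_layers(layers):
    """Return a list of problems found in a list of layer type names."""
    problems = []
    duplicates = sorted({name for name in layers if layers.count(name) > 1})
    for name in duplicates:
        problems.append(f"{name} appears {layers.count(name)} times")
    # Only report the order when there are no duplicates to fix first.
    if not duplicates and layers != EXPECTED_ORDER:
        problems.append(f"unexpected layers or order: {layers}")
    return problems


print(check_playable_layers(["Base", "Additive", "Gesture", "Action", "FX"]))
print(check_playable_layers(["Base", "Additive", "Gesture", "FX", "FX"]))
```

An empty list means the layers match the assumed layout. The second call corresponds to the "two FX layers" case.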

“Use isolated signals for both eyes” lets your eyes move separately.

It can express converging (crossed) eye movements, but it requires up to 16 additional bits of parameter memory.

“Use vertical eye movements” can be disabled in some cases to reduce memory usage.

“Use horizontal eye movements” is usually required.
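
The cost of these options can be worked through with a short sketch. It is one plausible reading of the figures above, not the tool's actual parameter layout: it assumes each gaze value costs 8 bits, that isolated signals need one value per eye and axis, and it leaves blinking out. The generated value names are hypothetical.

```python
BITS_PER_VALUE = 8  # assumed cost of one tracked gaze value


def gaze_values(isolated, horizontal=True, vertical=True):
    """List the gaze values needed for the chosen options (hypothetical names)."""
    axes = []
    if horizontal:
        axes.append("Horizontal")
    if vertical:
        axes.append("Vertical")
    eyes = ["Left", "Right"] if isolated else ["Combined"]
    return [f"Eye_{eye}_{axis}" for eye in eyes for axis in axes]


combined = len(gaze_values(isolated=False)) * BITS_PER_VALUE
isolated = len(gaze_values(isolated=True)) * BITS_PER_VALUE
print(combined, isolated, isolated - combined)

# With vertical movement disabled, the extra cost of isolated signals shrinks.
no_vertical_extra = (
    len(gaze_values(isolated=True, vertical=False))
    - len(gaze_values(isolated=False, vertical=False))
) * BITS_PER_VALUE
print(no_vertical_extra)
```

Under these assumptions the extra cost of isolated signals is 16 when both axes are used and 8 when vertical movement is disabled, which matches the "up to 16" figure.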

On humanoid avatars, the tool uses the bones assigned as the eyes in Unity's humanoid system.

The gaze angle depends on the muscle settings of the humanoid avatar configuration. You can also adjust the angle with the slider in the tool.

In VRChat, the animator must use VRCTrackingControl to move the bones managed by VRChat, including the eye bones.

However, this setting will be overwritten by another animation’s VRCTrackingControl.

You can change and fix your animations to keep it enabled.

Alternatively, as a workaround, disable the hand gestures and avoid using the other animations.

5. Run

Please make a backup before running, to be safe.

If you distribute your avatar, complete the steps up to this one.

The following steps are done by the users. Therefore, you do not include this tool with your avatar when you distribute it.

6. Upload

Upload your avatar the same way as any other avatar.

Then enable the OSC function.

Turn on [Expression Menu]→[Options]→[OSC]→[Enabled] on the expression menu.

If you upload the avatar over an existing avatar (that is, when you overwrite an avatar), VRChat might not receive OSC signals (as of 13th March 2022). In this case, you need to restart the VRChat application. This is a problem with VRChat.

If you change the parameters for OSC, you must also reset the OSC configuration in VRChat. Select [Expression Menu]→[Options]→[OSC]→[Reset Config] on the expression menu.

OSC configuration on VRChat

7. Preparing trackers

If you use a Vive Facial Tracker or a Vive Pro Eye, you need to install the SRanipal Runtime.

You can get it on the HTC Vive website for developers (https://developer-express.vive.com/resources/vive-sense/eye-and-facial-tracking-sdk/download/).

If you use a Droolon Pi1, the aSee VR Runtime is required. You can install it from Pitools.

8. AkaneFacialOSC

You need to do this step whenever you use facial/eye-tracking.

AkaneFacialOSC (https://booth.pm/ja/items/3686598) sends your facial and eye-tracking data to the VRChat application over OSC.

Download it and run FacialOSC.exe.
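
To give an idea of what travels over OSC, here is a minimal sketch of a sender written with only the Python standard library. It is not AkaneFacialOSC's code. It assumes VRChat's default OSC input port 9000 and the `/avatar/parameters/<name>` address scheme, and it assumes the avatar parameter is named `Jaw_Open` after the tracking key, which may differ on your avatar.

```python
import socket
import struct


def osc_string(text):
    """Encode an OSC string: ASCII, null-terminated, padded to a multiple of 4 bytes."""
    data = text.encode("ascii")
    return data + b"\x00" * (4 - len(data) % 4)


def osc_float_message(address, value):
    """Build an OSC message carrying one 32-bit big-endian float."""
    return osc_string(address) + osc_string(",f") + struct.pack(">f", value)


def send_parameter(name, value, host="127.0.0.1", port=9000):
    """Send one avatar parameter over UDP (host and port are assumptions)."""
    message = osc_float_message(f"/avatar/parameters/{name}", value)
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(message, (host, port))


# Build (but do not send) a message that sets the assumed Jaw_Open parameter to 0.5.
message = osc_float_message("/avatar/parameters/Jaw_Open", 0.5)
print(len(message), message)
```

Calling `send_parameter("Jaw_Open", 0.5)` while VRChat is running with OSC enabled would, under these assumptions, open the jaw halfway.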